AI-based threats are expected to grow exponentially. The main weakness on the defender side is no longer coming up with good detection ideas, but turning those ideas into production rules quickly enough and at sufficient scale.

AI-Native Malware Will Outpace Traditional SIEMs Without Automated Rule Deployment

Future malware families are likely to embed small LLMs or similar models directly into their code. This enables behavior that is very hard for traditional defenses to handle:

- Self-modifying code that keeps changing to avoid signatures.

- Context-aware evasion, where the malware “looks” at local logs, running processes, and security tools and adapts its tactics on the fly.

- Autonomous “AI ransomware agents” that call external platforms for instructions, fetch new payloads, negotiate ransom, and then redeploy in a different form.

Malware starts to behave less like a static binary and more like a flexible service that learns and iterates inside each victim environment.

Most SIEM setups are not designed for this world. Even leading platforms usually support a few hundred rules at most. That is not enough to cover the volume and variety of AI-driven techniques across large, complex estates. In practice, serious coverage means thousands of active rules mapped to specific log sources, assets, and use cases.

Here, the hard limit is SOC capacity. Every rule has a cost: tuning, false positive handling, documentation, and long-term maintenance. To keep the workload under control, teams disable or, more often, never onboard a significant share of the available detection content.

Switching off a rule that is already in monitoring means explicitly taking responsibility for removing a layer of defense, so with limited capacity, it often feels safer to block new rules than to retire existing ones.

For years, the main concern has been alert fatigue: too many alerts for too few analysts. In an AI-native threat landscape, another problem becomes more important: coverage gaps. The most dangerous attack is the one that never triggers an alert because the required rule was never written, never approved, or never deployed.

This shifts the role of SOC leadership. The focus moves from micromanaging individual rules to managing the overall detection portfolio:

- Which behaviors and assets are covered?

- Which blind spots are accepted, and why?

- How fast can the rule set change when a new technique, exploit, or campaign appears?

Traditional processes make this even harder. Manual QA, slow change control, and ticket-driven deployments can stretch the time from “we know how to detect this” to “this rule is live in production” into days or weeks. AI-driven campaigns can adapt within hours.

To close this gap, SOC operations will need to become AI-assisted themselves:

- AI-supported rule generation and conversion from threat reports, hunting queries, and research into ready-to-deploy rules across multiple query languages.

- Automated coverage mapping against frameworks like MITRE ATT&CK and against real telemetry (streams, topics, indices, log sources) to see what is actually monitored.

- Intelligent prioritization of which rules to enable, silence, or tune based on risk, business criticality, and observed impact.

- Tight integration with real-time event streaming platforms, so new rules can be tested, rolled out, and rolled back safely across very large volumes of data.

Without this level of automation and streaming-first design, SIEM becomes a bottleneck. AI-native threats will not wait for weekly change windows; detection intelligence and rule deployment must operate at streaming speed.
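The coverage-mapping idea from the list above can be illustrated with a minimal sketch. It assumes a hypothetical `Rule` record and made-up log source names; the ATT&CK technique IDs are real, but the data model is not taken from any particular product. A technique counts as covered only when an enabled rule maps to it and the telemetry that rule needs is actually collected.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    # Hypothetical rule record, for illustration only
    name: str
    technique: str      # ATT&CK technique ID the rule is mapped to
    log_source: str     # telemetry the rule needs in order to fire
    enabled: bool = True


def coverage_report(rules, tracked_techniques, available_sources):
    """Split tracked techniques into covered ones and blind spots.

    A technique is covered only if at least one enabled rule maps to it
    AND that rule's log source is present in available_sources.
    """
    covered = {
        r.technique
        for r in rules
        if r.enabled and r.log_source in available_sources
    }
    tracked = set(tracked_techniques)
    return {
        "covered": sorted(tracked & covered),
        "blind_spots": sorted(tracked - covered),
    }


rules = [
    Rule("Suspicious PowerShell", "T1059.001", "windows_process_creation"),
    Rule("LSASS memory access", "T1003.001", "sysmon_process_access"),
    Rule("Cloud key creation", "T1098.001", "aws_cloudtrail", enabled=False),
]

report = coverage_report(
    rules,
    tracked_techniques=["T1059.001", "T1003.001", "T1098.001", "T1486"],
    available_sources={"windows_process_creation", "aws_cloudtrail"},
)
print(report)
```

In this example only T1059.001 is covered: the LSASS rule has no matching telemetry, the cloud rule is disabled, and T1486 has no rule at all. Those three show up as blind spots that someone has to either accept or close.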

AI-Native Detection Intelligence Will Become the New Standard

By 2026, cybersecurity vendors will be judged on how deeply AI is embedded into their detection lifecycle, not on whether they simply “use AI” as a marketing label. Enterprise buyers, especially at Fortune 100 scale, will treat AI-native detection intelligence as a requirement.

Concretely, large customers will demand:

- Self-managed, private LLMs that do not leak proprietary telemetry or logic to public clouds.

- GPU-efficient models optimized specifically for detection intelligence workloads, not generic chat or content tasks.

- Clear guarantees that data stays within well-defined trust boundaries.

On the product side, AI will touch every part of the detection stack:

- AI-generated detection rules aligned with frameworks like MITRE ATT&CK (already in place at SOC Prime).

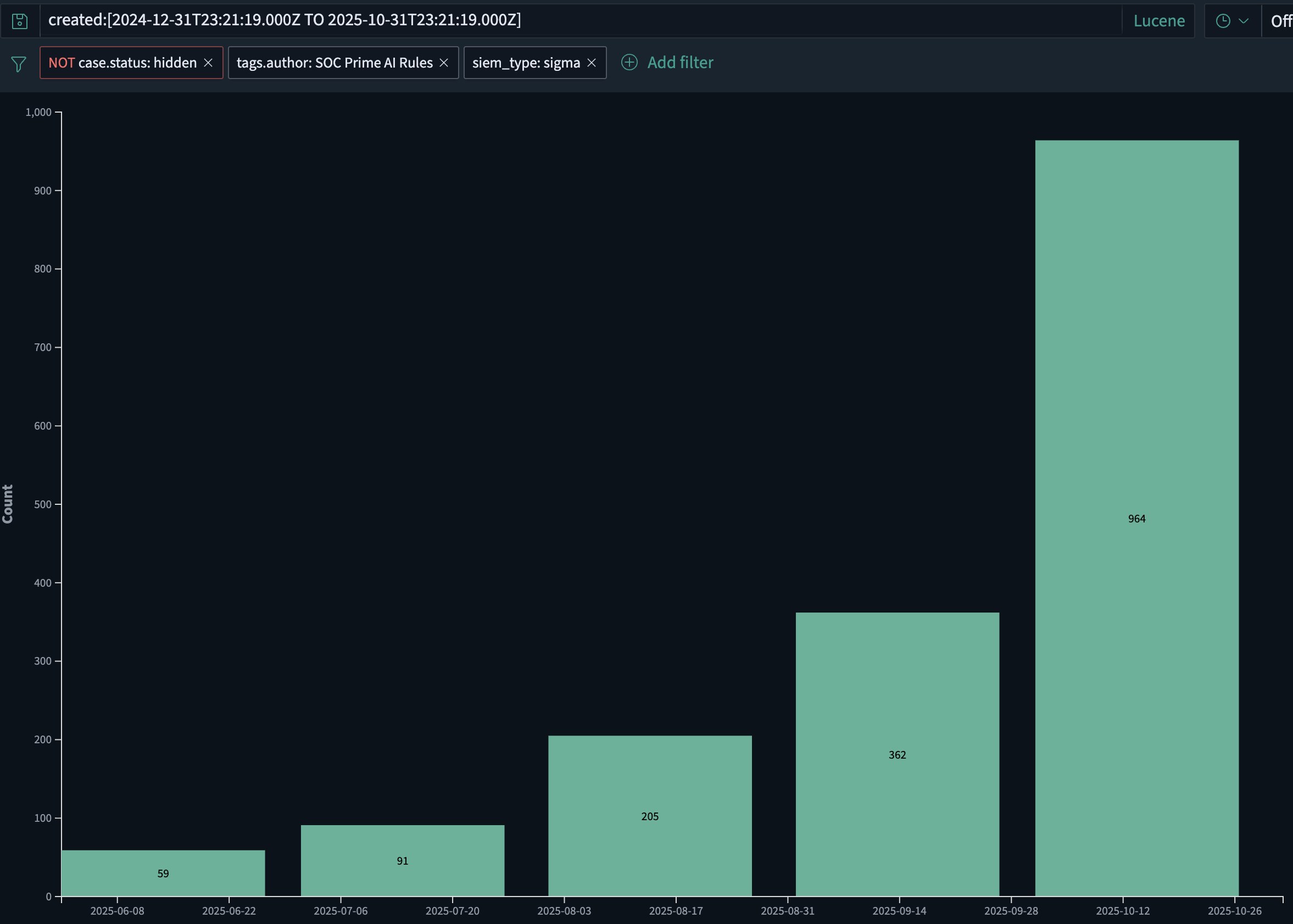

- At SOC Prime alone, the volume of AI-generated detection rules has been growing at roughly 2x month over month, from about 60 rules in June 2025 to nearly 1,000 in October 2025 (four consecutive doublings would give 60 × 2^4 = 960). This growth is driven both by faster deployment of new rules and by emerging AI-powered malware that requires AI to fight AI.

- AI-driven enrichment, tuning, and log-source adaptation so that rules stay relevant as telemetry changes.

- AI-assisted retrospective investigations that can automatically replay new logic over historical data.

- AI-based prioritization of threat content based on customer stack, geography, sector, and risk profile.

In other words, AI becomes part of the detection “factory”: how rules are produced, maintained, and retired across many environments. By 2026, AI-supported detection intelligence will no longer be a value-add feature; it will be the baseline expectation for serious security platforms.

Foundation Model Providers Will Own a New Security Layer – and Need LLM Firewalls

As large language models become part of the core infrastructure for software development, operations, and support, foundation model providers inevitably join the cybersecurity responsibility chain. When their models are used to generate phishing campaigns, malware, or exploit code at scale, pushing all responsibility to end-user organizations is no longer realistic.

Foundation model providers will be expected to detect and limit clearly malicious use cases and to control how their APIs are used, while still allowing legitimate security testing and research. This includes:

- Screening prompts for obvious signs of malicious intent, such as step-by-step instructions for gaining initial access, escalating privileges, moving laterally, or exfiltrating data.

- Watching for suspicious usage patterns across tenants, such as automated loops, infrastructure-like behavior, or repeated generation of offensive security content.

- Applying graduated responses: rate limiting, extra verification, human review, or hard blocking when abuse is obvious.
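One way to picture the graduated responses listed above is as a threshold ladder over an abuse score. The sketch below assumes that some upstream classifier already produces a score between 0 and 1; the `Action` names and the threshold values are hypothetical and chosen only for illustration.

```python
from enum import IntEnum


class Action(IntEnum):
    ALLOW = 0
    RATE_LIMIT = 1
    VERIFY = 2         # extra verification of the caller
    HUMAN_REVIEW = 3
    BLOCK = 4


# Hypothetical thresholds on an abuse score in [0, 1], strictest first
THRESHOLDS = [
    (0.9, Action.BLOCK),
    (0.7, Action.HUMAN_REVIEW),
    (0.5, Action.VERIFY),
    (0.3, Action.RATE_LIMIT),
]


def choose_action(abuse_score: float) -> Action:
    """Return the strictest action whose threshold the score reaches."""
    for threshold, action in THRESHOLDS:
        if abuse_score >= threshold:
            return action
    return Action.ALLOW


for score in (0.1, 0.35, 0.5, 0.95):
    print(score, choose_action(score).name)
```

Keeping the ladder in data rather than in branching code makes it easier to tune thresholds per tenant or per use case, for example a looser ladder for verified security research accounts.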

Generic “don’t help with hacking” filters are not enough. A dedicated security layer for LLM traffic is needed – an LLM firewall.

An LLM firewall sits between applications and the model and focuses on cyber risk:

- It performs semantic inspection of prompts and outputs for indicators of attack planning and execution.

- It enforces policy: what is allowed, what must be masked or transformed, and what must be blocked entirely.

- It produces security telemetry that can be fed into SIEM, SOAR, and streaming analytics for investigation and correlation with other signals.
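The three functions above (inspect, enforce policy, emit telemetry) can be sketched in a few lines. This is a deliberately simplified illustration, not how any real LLM firewall works: plain regular expressions stand in for semantic inspection, and the patterns, the `inspect_prompt` function, and the event fields are all assumptions made for the example.

```python
import json
import re
import time

# Stand-in for semantic inspection: simple patterns, illustration only.
# A phrase list like this would over-block legitimate security work.
BLOCK_PATTERNS = [
    re.compile(r"\b(lateral movement|privilege escalation|exfiltrat\w*)\b", re.I),
]
# Content that must be masked before it reaches the model
# (here: strings shaped like an AWS access key ID).
MASK_PATTERNS = [re.compile(r"\bAKIA[0-9A-Z]{16}\b")]


def inspect_prompt(prompt: str, tenant: str) -> dict:
    """Return a verdict, the text to forward, and a telemetry event."""
    verdict, text = "allow", prompt
    if any(p.search(prompt) for p in BLOCK_PATTERNS):
        verdict, text = "block", ""
    else:
        for p in MASK_PATTERNS:
            text, count = p.subn("[MASKED]", text)
            if count:
                verdict = "mask"
    # Telemetry carries metadata only, never the raw prompt.
    event = {
        "ts": time.time(),
        "tenant": tenant,
        "verdict": verdict,
        "prompt_chars": len(prompt),
    }
    return {"verdict": verdict, "forward": text, "telemetry": json.dumps(event)}


result = inspect_prompt("Use key AKIAIOSFODNN7EXAMPLE to list buckets", "tenant-a")
print(result["verdict"], "|", result["forward"])
```

The JSON event is the part that matters for the rest of the stack: it is what would be shipped to SIEM, SOAR, or a streaming topic for correlation with other signals.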

Products like AI DR Bastion are designed with this role in mind: a protective layer around LLM usage that specializes in detecting and stopping offensive cyber use.

This type of control can help:

- Enterprises that consume LLMs, by reducing the risk that internal users or applications can easily weaponize models.

- Model and platform providers, by giving them a concrete mechanism to show that they are actively controlling abuse of their APIs.

As LLMs are embedded into CI/CD pipelines, developer assistants, customer support flows, incident response tools, and even malware itself, the boundary between “AI security” and “application security” disappears. Model providers, platform teams, and security organizations will share responsibility for how these systems are used.

In this architecture, LLM firewalls become a standard layer, similar to how WAFs and API gateways are standard today – working alongside SIEM and real-time streaming analytics to ensure that the same AI capabilities that accelerate business outcomes do not become a force multiplier for attackers.

The “Shift-Left Detection” Era Will Begin

By 2026, many enterprise security programs will recognize that pushing all telemetry into a SIEM first, and only then running detection, is both financially unsustainable and operationally too slow.

The next-generation stack will move detection logic closer to where data is produced and transported:

- Directly in event brokers, ETL pipelines, and streaming platforms such as Confluent Kafka.

- As part of the data fabric, not only at the end of the pipeline.

The result is a “shift-left detection” model:

- More than half of large enterprises are expected to start evaluating or piloting architectures where real-time detection runs in the streaming layer.

- The SIEM evolves toward a compliance, investigation, and retention layer, while first-line detection logic executes on the data in motion.

- Vendor-neutral, high-performance detection rules that can run at streaming scale become a key differentiator.

In this model, threat detection content is no longer tied to a single SIEM engine. Rules and analytics need to be:

- Expressed in formats that can execute on streaming platforms and in multiple backends.

- Managed as a shared catalog that can be pushed “before the SIEM” and still traced, audited, and tuned over time.
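A minimal sketch of what "detection before the SIEM" might look like: rules are kept as plain data rather than as queries in one SIEM's language, and they are evaluated against events as they flow past. The rule format, field names, and sample events below are hypothetical, and an in-memory list stands in for a real stream such as a Kafka topic.

```python
from typing import Dict, Iterable, Iterator, List, Tuple

Event = Dict[str, str]

# Hypothetical vendor-neutral rule format: every listed field must
# contain the given substring (case-insensitive) for the rule to match.
RULES: List[dict] = [
    {
        "id": "encoded-powershell",
        "match": {"process": "powershell.exe", "command_line": "-enc"},
    },
]


def matches(rule: dict, event: Event) -> bool:
    return all(
        needle.lower() in event.get(field, "").lower()
        for field, needle in rule["match"].items()
    )


def detect(stream: Iterable[Event]) -> Iterator[Tuple[str, Event]]:
    """Yield (rule_id, event) for each match while events are in motion."""
    for event in stream:
        for rule in RULES:
            if matches(rule, event):
                yield rule["id"], event


# An in-memory list stands in for the event stream.
events = [
    {"host": "ws-01", "process": "powershell.exe",
     "command_line": "powershell.exe -enc SQBFAFgA"},
    {"host": "ws-02", "process": "chrome.exe",
     "command_line": "chrome.exe --new-window"},
]

alerts = list(detect(events))
for rule_id, event in alerts:
    print(rule_id, event["host"])
```

Because `detect` is a generator over any iterable, the same rule catalog could in principle be attached to different transports, and the matched events can still be forwarded to the SIEM with the rule ID attached for tracing and audit.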

SOC Prime’s product direction for 2026 is aligned with this shift: building a line-speed pipeline that runs before the SIEM and integrates directly with streaming platforms. This makes it possible to combine:

- AI-native detection intelligence at scale,

- Real-time execution on event streams, and

- Downstream correlation, retention, and compliance in SIEM and data platforms.

Taken together, AI-native malware, LLM abuse, AI-driven detection intelligence, and shift-left detection architectures define the next wave of cyber threats – and the shape of the defenses needed to meet them.